S3 integration

This allows you to use an S3 bucket as the backend filesystem. However, since S3 isn't hierarchical, you only get simulated folders, no ability to rename, and a few other gotchas inherent to how S3 works. These aren't limitations we impose. They follow from S3's design, which is intended for serving static objects rather than for holding and manipulating file names.
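The "simulated folders" and "no rename" points can be made concrete with a minimal Python sketch that models a bucket as a flat dictionary of keys. This illustrates how S3-style storage behaves in general. It is not CrushFTP's code, and the helper names `list_folder` and `rename_prefix` are hypothetical. A folder listing is derived by splitting keys on `/`, and a rename has to be emulated by copying every object to a new key and deleting the old one.

```python
# Minimal sketch: a bucket modeled as a flat dict of key -> object bytes.
# S3 has no directories; "folders" are just shared key prefixes.


def list_folder(bucket: dict[str, bytes], prefix: str, delimiter: str = "/") -> tuple[list[str], list[str]]:
    """Return (files, subfolders) directly under `prefix`, the way an S3
    listing with a delimiter separates objects from common prefixes."""
    files: list[str] = []
    folders: set[str] = set()
    for key in sorted(bucket):
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        if delimiter in rest:
            # Up to and including the first delimiter counts as a subfolder.
            folders.add(prefix + rest.split(delimiter, 1)[0] + delimiter)
        elif rest:
            # A key with no further delimiter is a file in this "folder".
            files.append(key)
    return files, sorted(folders)


def rename_prefix(bucket: dict[str, bytes], old: str, new: str) -> int:
    """Emulate renaming folder `old` to `new` by copying each object to its
    new key and then deleting the original. Returns the number moved.
    Not atomic: an interruption leaves objects under both prefixes."""
    moved = 0
    for key in [k for k in bucket if k.startswith(old)]:
        bucket[new + key[len(old):]] = bucket[key]  # copy to the new key
        del bucket[key]                             # delete the old key
        moved += 1
    return moved


bucket = {"docs/a.txt": b"1", "docs/img/b.png": b"2", "top.txt": b"3"}
print(list_folder(bucket, ""))       # (['top.txt'], ['docs/'])
print(list_folder(bucket, "docs/"))  # (['docs/a.txt'], ['docs/img/'])
print(rename_prefix(bucket, "docs/", "archive/"))  # 2
print(sorted(bucket))  # ['archive/a.txt', 'archive/img/b.png', 'top.txt']
```

Because a "rename" touches every object under the prefix one at a time, it gets slower as the folder grows and can be interrupted halfway, which illustrates why S3 offers no native rename.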

1. Configuration.

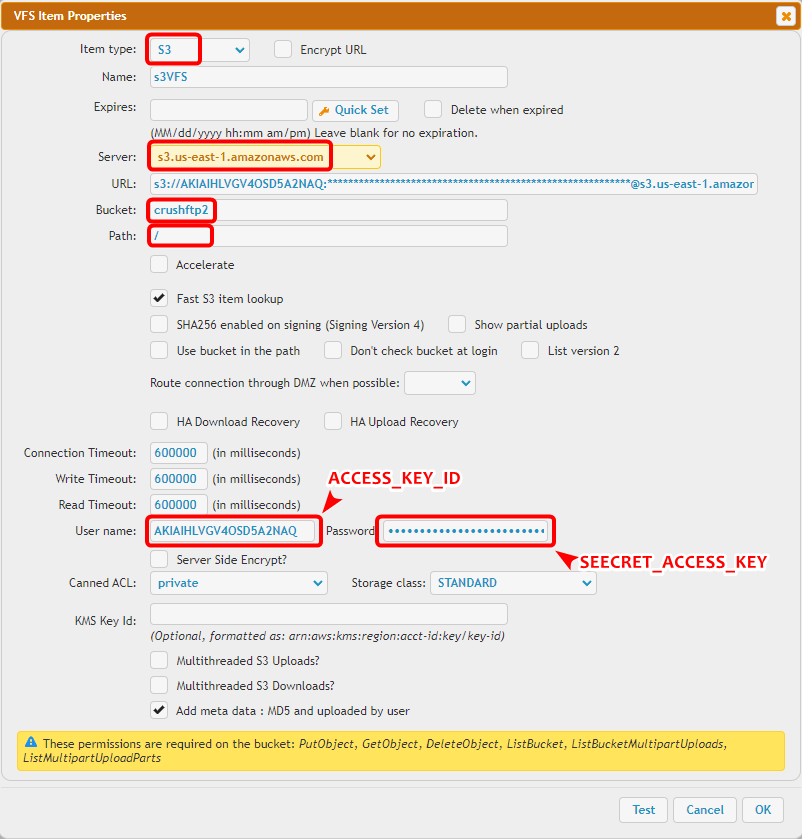

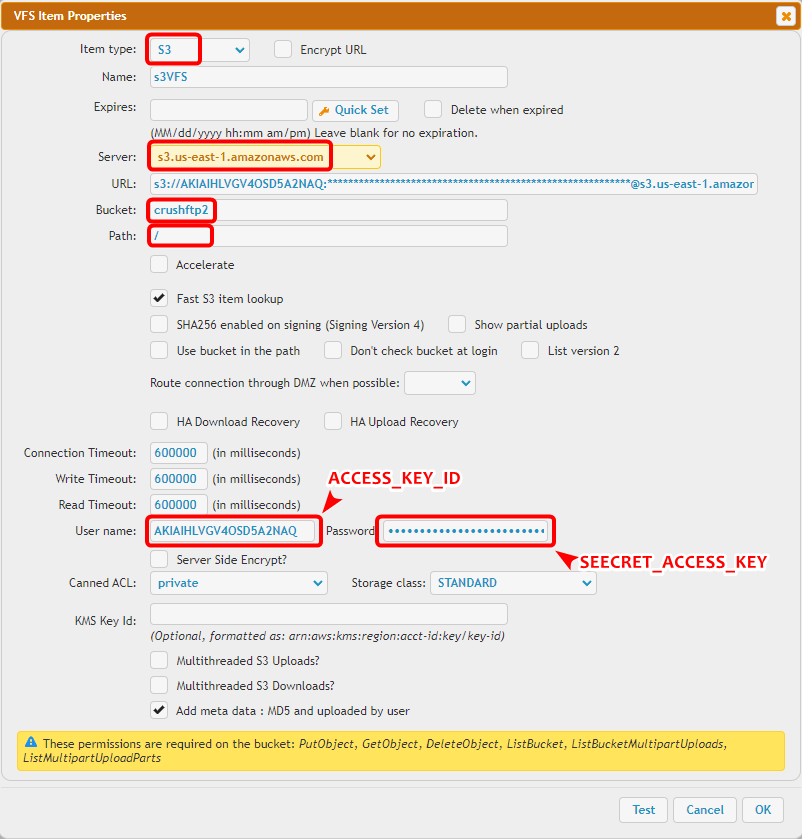

The URL should look like this (Replace the URL with your corresponding data!):

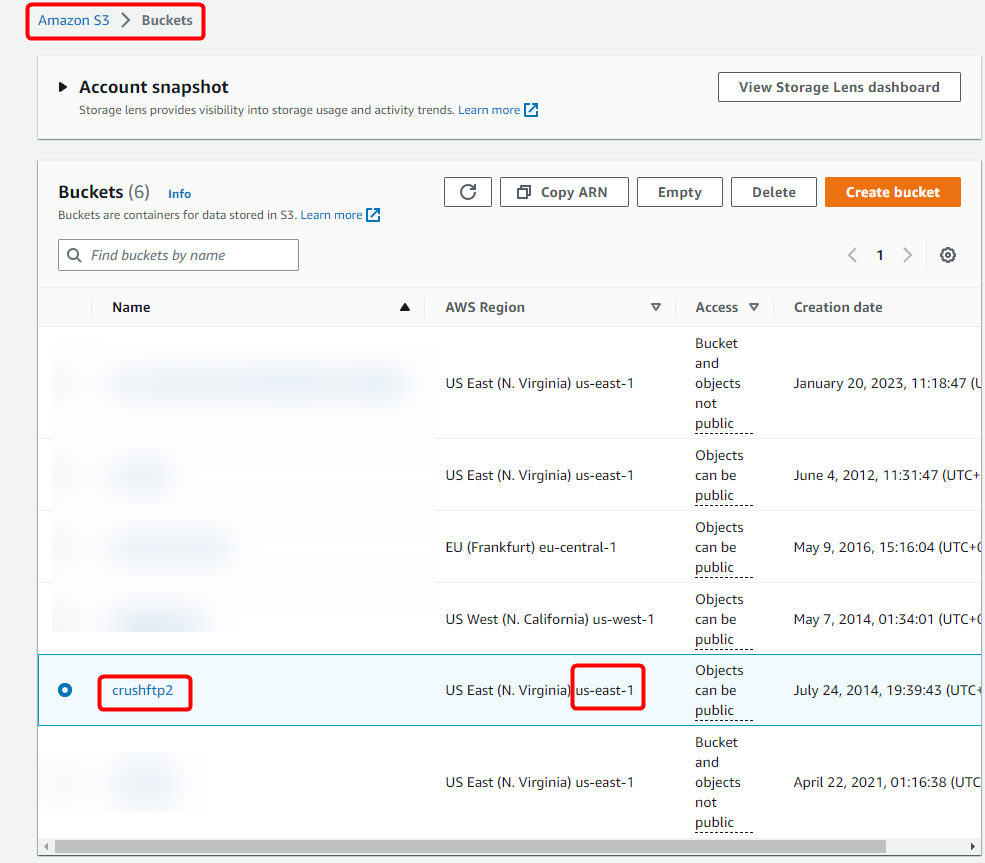

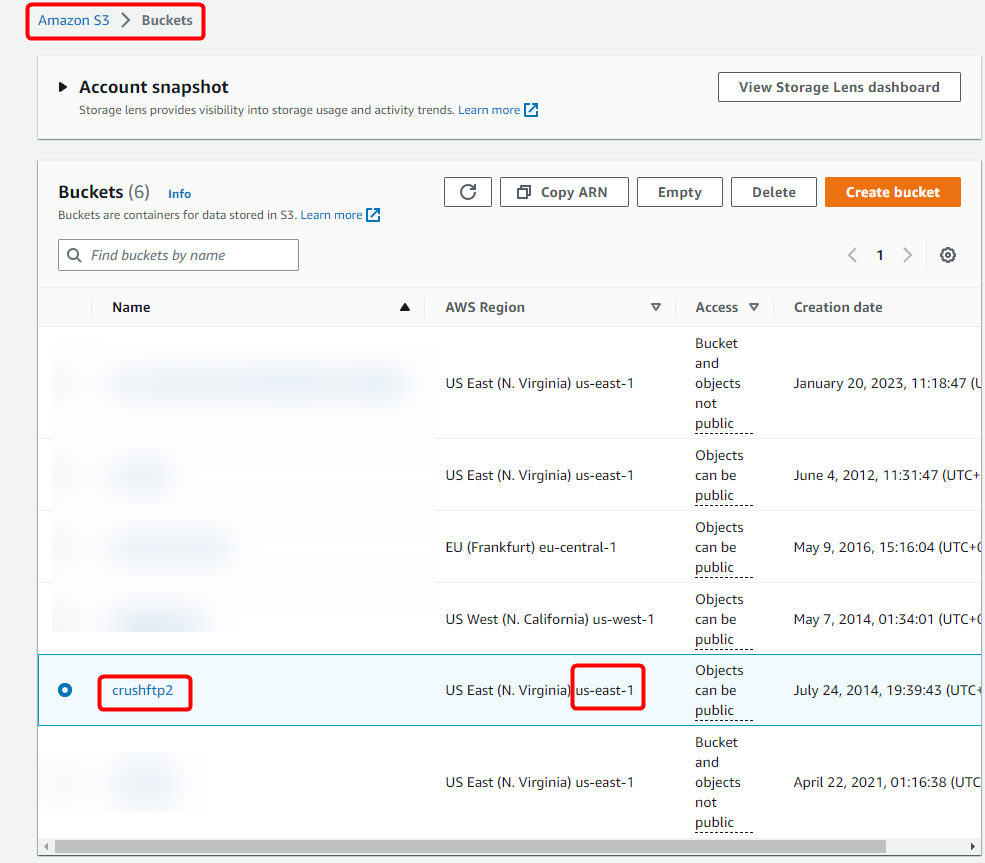

The security credentials (Access Key ID and Secret Access Key) are offered for saving when the S3 user is created. The server and bucket information can be found in the AWS console under S3 -> Buckets.

Then paste them into the appropriate fields in CrushFTP.
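The URL format `s3://ACCESS_KEY_ID:SECRET_ACCESS_KEY@SERVER/BUCKET/` can be assembled and checked with Python's standard `urllib.parse`. This is a sketch under one assumption: that the URL follows ordinary URL userinfo rules, in which case characters such as `/` and `+` (which AWS secret keys can contain) must be percent-encoded. Whether CrushFTP expects that encoding is not stated here; entering the keys in the separate fields avoids the question. `build_s3_url` and `parse_s3_url` are hypothetical helper names, and `s3.amazonaws.com` is only an example server value.

```python
from urllib.parse import quote, unquote, urlsplit


def build_s3_url(access_key_id: str, secret_access_key: str, server: str, bucket: str) -> str:
    """Assemble s3://ACCESS_KEY_ID:SECRET_ACCESS_KEY@SERVER/BUCKET/.
    safe="" percent-encodes every reserved character, including '/' and '+'."""
    user = quote(access_key_id, safe="")
    password = quote(secret_access_key, safe="")
    return f"s3://{user}:{password}@{server}/{bucket}/"


def parse_s3_url(url: str) -> dict[str, str]:
    """Split an s3:// URL back into its parts, decoding the credentials."""
    parts = urlsplit(url)
    if parts.scheme != "s3":
        raise ValueError(f"not an s3:// URL: {url!r}")
    return {
        "access_key_id": unquote(parts.username or ""),
        "secret_access_key": unquote(parts.password or ""),
        "server": parts.hostname or "",
        "bucket": parts.path.strip("/"),
    }


# Example values only; real keys come from the AWS console.
url = build_s3_url("AKIAEXAMPLE", "abc/def+ghi", "s3.amazonaws.com", "my-bucket")
print(url)  # s3://AKIAEXAMPLE:abc%2Fdef%2Bghi@s3.amazonaws.com/my-bucket/
print(parse_s3_url(url)["secret_access_key"])  # abc/def+ghi
```

Round-tripping through `parse_s3_url` is a quick way to confirm that a secret key containing `/` survives intact instead of being mistaken for the start of the path.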

We also support IAM authentication; it just isn't the default mode. To use it, set the S3 username to "iam_lookup" and the S3 password to "lookup".
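The IAM convention comes down to choosing between two credential pairs. The sketch below (the helper `s3_credentials` is hypothetical) returns the static key pair when both keys are given and the documented `iam_lookup` / `lookup` placeholders when neither is. Where CrushFTP obtains credentials once it is in IAM mode is not covered by this page, so the sketch stops at the values you would enter.

```python
from typing import Optional

# Values documented for CrushFTP's S3 username/password fields in IAM mode.
IAM_USERNAME = "iam_lookup"
IAM_PASSWORD = "lookup"


def s3_credentials(access_key_id: Optional[str] = None,
                   secret_access_key: Optional[str] = None) -> tuple[str, str]:
    """Return the (username, password) pair to enter in CrushFTP:
    the static key pair if both keys are given, else the IAM placeholders."""
    if access_key_id and secret_access_key:
        return access_key_id, secret_access_key
    if access_key_id or secret_access_key:
        # A half-filled key pair is almost certainly a mistake.
        raise ValueError("give both keys, or neither to use IAM lookup")
    return IAM_USERNAME, IAM_PASSWORD


print(s3_credentials())                    # ('iam_lookup', 'lookup')
print(s3_credentials("AKIAEXAMPLE", "x"))  # ('AKIAEXAMPLE', 'x')
```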

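The following policy permissions are needed on S3:

- `s3:GetBucketLocation`
- `s3:ListAllMyBuckets`
- `s3:ListBucket`
- `s3:ListBucketMultipartUploads`
- `s3:PutObject`
- `s3:AbortMultipartUpload`
- `s3:ListMultipartUploadParts`
- `s3:DeleteObject`
- `s3:GetObject`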
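In AWS, the permissions CrushFTP needs are granted through an IAM policy attached to the user. As a sketch, assuming a single bucket named by the placeholder `my-bucket`, the Python below builds such a policy document. The split into three statements follows standard IAM resource scoping: bucket-level actions target the bucket ARN, object-level actions target `bucket/*`, and `s3:ListAllMyBuckets` is not tied to any one bucket. The function name `crushftp_s3_policy` is hypothetical, and you may want to tighten or widen the resources for your own setup.

```python
import json

# Bucket-level actions apply to the bucket itself ...
BUCKET_ACTIONS = ["s3:GetBucketLocation", "s3:ListBucket", "s3:ListBucketMultipartUploads"]
# ... object-level actions apply to the objects inside it.
OBJECT_ACTIONS = ["s3:PutObject", "s3:GetObject", "s3:DeleteObject",
                  "s3:AbortMultipartUpload", "s3:ListMultipartUploadParts"]


def crushftp_s3_policy(bucket: str) -> dict:
    """Build an IAM policy document granting the required S3 permissions,
    scoped to a single bucket (the bucket name is a placeholder)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            # ListAllMyBuckets is not tied to a specific bucket.
            {"Effect": "Allow", "Action": ["s3:ListAllMyBuckets"], "Resource": "*"},
            {"Effect": "Allow", "Action": BUCKET_ACTIONS, "Resource": f"arn:aws:s3:::{bucket}"},
            {"Effect": "Allow", "Action": OBJECT_ACTIONS, "Resource": f"arn:aws:s3:::{bucket}/*"},
        ],
    }


if __name__ == "__main__":
    print(json.dumps(crushftp_s3_policy("my-bucket"), indent=2))
```

The printed JSON can be pasted into the IAM console's policy editor; if one of the bucket ARNs is omitted, the related calls are typically denied even though the action is listed.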

2. Access other cloud storage through the S3 REST API

Google Cloud -

Backblaze (B2) -
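Providers that expose an S3-compatible API can, in principle, use the same `s3://KEY:SECRET@SERVER/BUCKET/` form. Only the SERVER part changes, and the credentials are that provider's S3-style key pair. The endpoints below are assumptions based on the providers' public S3-compatibility offerings (the Google Cloud Storage XML API with HMAC keys, the Backblaze B2 S3-compatible API with application keys). They are not values taken from the CrushFTP documentation, so verify them and the region against each provider's docs. `provider_s3_url` is a hypothetical helper.

```python
from urllib.parse import quote

# Assumed S3-compatible endpoints, not taken from the CrushFTP docs.
ENDPOINTS = {
    "aws": "s3.amazonaws.com",
    "google": "storage.googleapis.com",          # Cloud Storage XML API, HMAC keys
    "backblaze": "s3.{region}.backblazeb2.com",  # B2 S3-compatible API, application keys
}


def provider_s3_url(provider: str, key_id: str, secret: str, bucket: str, region: str = "") -> str:
    """Build s3://KEY:SECRET@SERVER/BUCKET/ for an S3-compatible provider.
    Only SERVER differs between providers; credentials are percent-encoded."""
    template = ENDPOINTS[provider]
    if "{region}" in template and not region:
        raise ValueError(f"the {provider} endpoint needs a region")
    server = template.format(region=region)
    return f"s3://{quote(key_id, safe='')}:{quote(secret, safe='')}@{server}/{bucket}/"


print(provider_s3_url("google", "GOOGEXAMPLE", "secret", "my-bucket"))
# s3://GOOGEXAMPLE:secret@storage.googleapis.com/my-bucket/
print(provider_s3_url("backblaze", "KEYID", "APPKEY", "my-bucket", region="us-west-004"))
# s3://KEYID:APPKEY@s3.us-west-004.backblazeb2.com/my-bucket/
```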



List of attachments

| Kind | Attachment Name | Size | Version | Date Modified | Author | Change note |
|---|---|---|---|---|---|---|
| png | S3_VFS_config.png | 54.2 kB | 1 | 07-Feb-2023 03:31 | Sandor | |
| png | S3_bucket_info.png | 99.3 kB | 1 | 07-Feb-2023 03:31 | Sandor | |


This particular version was published on 09-Oct-2023 06:19 by krivacsz.
